Flexible Network Binarization with Layer-Wise Priority

- Date: 07/10/18

- Team: He Wang, Yi Xu, Bingbing Ni, Lixue Zhuang, Hongteng Xu

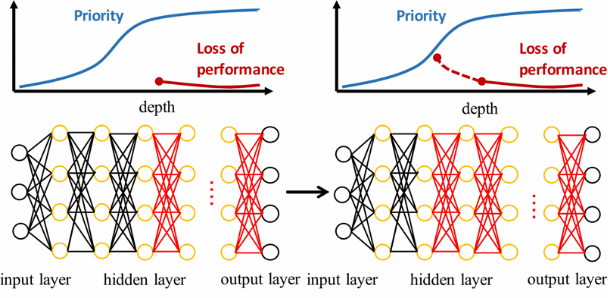

- Goal: To develop a novel, flexible neural network binarization method based on the concept of layer-wise priority, which binarizes parameters in inverse order of their layer depth.

Brief Introduction

The growing depth and size of deep neural networks pose a great challenge for their deployment on mobile and embedded devices. A number of network compression methods have been proposed to binarize real-valued networks. In particular, they use different iterative thresholding strategies to approximate the weights of real-valued networks with ±1 according to their signs. However, most of these methods binarize all network parameters simultaneously. Such a strategy suffers from two problems. First, the approximation errors caused by binarization accumulate dramatically across all layers, which makes training difficult to converge. Second, binarizing all parameters at once limits the flexibility of these methods in exploring the trade-off between performance loss and compression ratio.
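The sign-based approximation described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code. Besides mapping each weight to ±1 by its sign, it assumes a per-layer scaling factor alpha = mean(|w|), a common choice (used, e.g., in XNOR-Net) that minimizes the squared error once the signs are fixed. The function names `binarize` and `discrepancy` are hypothetical.

```python
from typing import List, Tuple


def binarize(weights: List[float]) -> Tuple[float, List[int]]:
    """Approximate real-valued weights w by alpha * b, with every b_i in {-1, +1}.

    b_i = sign(w_i), mapping w_i == 0 to +1 (an arbitrary convention here).
    alpha = mean(|w_i|) is an assumed scaling factor: it is the least-squares
    optimal scale once the signs b are fixed.
    """
    signs = [1 if w >= 0 else -1 for w in weights]
    alpha = sum(abs(w) for w in weights) / len(weights)
    return alpha, signs


def discrepancy(weights: List[float], alpha: float, signs: List[int]) -> float:
    """Squared error ||w - alpha * b||^2 between the weights and their binary approximation."""
    return sum((w - alpha * b) ** 2 for w, b in zip(weights, signs))


w = [0.4, -0.2, 0.1, -0.3]
alpha, b = binarize(w)
print(b, round(alpha, 4), round(discrepancy(w, alpha, b), 4))  # [1, -1, 1, -1] 0.25 0.05
```

Perturbing `alpha` in either direction increases `discrepancy`, which is why the mean absolute value is a natural default scale for a fixed sign pattern.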

To solve these problems, we propose a novel and flexible neural network binarization method by introducing the concept of layer-wise priority, which binarizes parameters in inverse order of their layer depth. In each training step, our method selects a specific network layer and minimizes the discrepancy between that layer's original real-valued weights and their binary approximations. This layer-wise binarization scheme is inspired by block coordinate descent. During the binarization process, we can flexibly decide whether to continue binarizing the remaining floating-point layers, and thereby control the trade-off between performance loss and the compression ratio of the network model.
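A toy sketch of such a layer-wise schedule follows, under several assumptions that are not taken from the project description. "Inverse order of layer depth" is read as deepest layer first. Each step binarizes one layer while holding the others fixed (the block-coordinate view), and the training update that accompanies each step is omitted. The stopping rule is a hypothetical target compression ratio standing in for the flexible decision described above. Storage is counted as 32 bits per floating-point weight and 1 bit per binary weight, ignoring the scaling factors. The layer names, sizes, and all helper functions are hypothetical.

```python
from typing import Dict, List, Set


def binarize_layer(weights: List[float]) -> List[float]:
    """Replace a layer's weights by alpha * sign(w), with alpha = mean(|w|) (assumed scaling)."""
    alpha = sum(abs(w) for w in weights) / len(weights)
    return [alpha if w >= 0 else -alpha for w in weights]


def compression_ratio(layers: Dict[str, List[float]], binarized: Set[str], float_bits: int = 32) -> float:
    """Storage of the all-float model divided by storage of the partially binarized one.

    Assumes float_bits per floating-point weight and 1 bit per binarized weight;
    the per-layer scale alpha is ignored.
    """
    original = sum(float_bits * len(w) for w in layers.values())
    current = sum((1 if name in binarized else float_bits) * len(w) for name, w in layers.items())
    return original / current


def layerwise_binarize(layers: Dict[str, List[float]], depth: Dict[str, int], target_ratio: float) -> Set[str]:
    """Binarize one layer per step, deepest layer first, until target_ratio is reached.

    Mutates `layers` in place and returns the names of the binarized layers.
    """
    order = sorted(layers, key=lambda name: depth[name], reverse=True)
    binarized: Set[str] = set()
    for name in order:
        # Hypothetical stopping rule: keep the remaining layers in floating point
        # once the model is compressed enough.
        if compression_ratio(layers, binarized) >= target_ratio:
            break
        # Block-coordinate step: only the selected layer changes; all others stay fixed.
        # (The training update that accompanies each step is omitted in this sketch.)
        layers[name] = binarize_layer(layers[name])
        binarized.add(name)
    return binarized


def make_toy_model():
    """Hypothetical 3-layer model: flat weight lists plus depth (1 = closest to the input)."""
    layers = {
        "conv1": [0.5, -0.5, 0.25, -0.25],
        "conv2": [0.1 * (i + 1) * (-1) ** i for i in range(8)],
        "fc": [0.01 * (i + 1) * (-1) ** i for i in range(16)],
    }
    depth = {"conv1": 1, "conv2": 2, "fc": 3}
    return layers, depth


layers, depth = make_toy_model()
chosen = layerwise_binarize(layers, depth, target_ratio=5.0)
print(sorted(chosen), round(compression_ratio(layers, chosen), 2))  # ['conv2', 'fc'] 5.89
```

Lowering `target_ratio` stops earlier and keeps more layers in floating point: with `target_ratio=2.0` only `fc` is binarized (ratio 896/400 = 2.24), while any target above 32 binarizes every layer.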

In future work, we aim to develop a more principled priority metric and a more effective control mechanism to achieve the best trade-off between compression ratio and performance.

Flexible layer-wise binarization algorithm: